Industry Perspective With SpaceX Lead Software Engineer Stephen Jones

Stephen Jones is a lead software engineer at SpaceX and former CUDA architect at NVIDIA.

For this post, Stephen Jones gives his perspective on aspects of technology of great interest to Rescale’s users. Stephen was a major contributor to CUDA: many of Rescale’s users have run analyses that take advantage of his work on GPGPU technology, and others have written their own codes using CUDA libraries. From a personal perspective, having returned to engineering software after a number of years in general software engineering, I thought it would be interesting to ask Stephen about the technological advances of recent years and how he sees future trends.

To get the ball rolling, I started with the evolution of parallel computing:

Stephen Jones: Around 10 years ago, all computing became parallel computing. Processors stopped getting individually faster, and Moore’s Law was continued through adding more processors (consumer processors all have at least 4 cores, and servers can have 16 or more – any non-parallel program is therefore using no more than 25% of the machine). I think a key effect arises because parallel programming is different to serial programming in several fundamental ways which make it much more difficult. I expect in future (sic) to see parallel programming be done by tools (compilers, libraries, automated programming languages) not by humans, and that this will mark a sea-change in the way all programming is approached.
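The 25% figure follows from simple arithmetic. As a minimal sketch (the function name is hypothetical, and it assumes identical cores with no clock boosting or hyper-threading effects), a single-threaded program can occupy at most one core out of however many the machine has:

```python
def max_single_thread_share(cores):
    """Upper bound on the fraction of a machine one serial program can use.

    Assumption: all cores are identical and a serial program keeps exactly
    one of them busy, so the bound is simply 1 / cores.
    """
    if cores < 1:
        raise ValueError("cores must be at least 1")
    return 1.0 / cores


if __name__ == "__main__":
    # The core counts mentioned in the interview: 4 (consumer) and 16 (server).
    for cores in (4, 16):
        print(f"{cores} cores: at most {max_single_thread_share(cores):.2%}")
```

On a 16-core server the same serial program would leave almost 94% of the machine idle, which is the gap that parallel tools are expected to close.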

At Rescale, we have a diverse customer base. Many of our partners in industry or academia work with High Performance Computing (HPC) clusters on-premises. I asked Stephen what HPC means to him:

Stephen Jones: I see this as a general term covering the cutting edge of computing technology. The universe is complex, and we are very far from being able to model it precisely; therefore, we can always find tasks for ever-larger computers. What happens is that, as technology advances what a computer can do, it unlocks new problems which we couldn’t attack before. HPC will always exist, and will always be a niche market. On the other hand, it won’t soon be supplanted by “powerful-enough” desktops because of the aforementioned complexity of the universe. It does act as a very interesting technology incubator, in terms of both hardware and software.

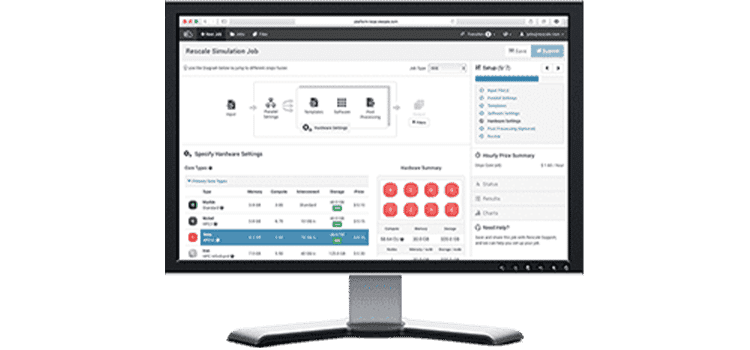

Engineers and scientists worldwide are using the Rescale platform to complement and extend the resources their organization can provide. I asked Stephen about how he sees the utilization of cloud technologies in HPC:

Stephen Jones: This is one of the great enablers of the last 10 years. Amazon’s cloud, in particular, is game-changing and (to my mind) one of the most amazing things in the computing world. It is now acceptable, when trying to get your startup funded, to say “my infrastructure is AWS so we’re not spending money on hardware”. What’s particularly interesting is that it enables HPC as well as basic IT infrastructure, so you begin to see startups doing new things with massive-scale computing which previously were out of reach of such small players. In effect, we can have 100x as many people developing for HPC as ever in the past, and that has to produce new interesting applications.

In my role as an application engineer here at Rescale, I recently built and installed some open source packages that take advantage of CUDA and NVIDIA hardware on the Rescale platform. I asked Stephen about his personal experience working on CUDA:

Stephen Jones: It’s obviously hard for me to be objective about this, because I was the architect of CUDA for a number of years. One thing you see in parallel and HPC programming languages is a very “lowest common denominator” approach – nobody wants to invest in highly complex code which will only run on a single machine and will prevent them from buying a different one. The result is the “designed by a committee” problem, coupled with a “master of none” result. CUDA is interesting because it intentionally goes to the other extreme – it works only on a single type of machine but is therefore able to push the boundaries because it is not limited by trying to please everyone. It is doing well right now because the NVIDIA GPU is widely prevalent in HPC; the result is that CUDA investigates a lot of new programming model aspects first. Many CUDA features find their way into other languages in one form or another (OpenCL, OpenMP, Renderscript) because they’re found to be useful. The thing which really enables CUDA is that the language designers can get hardware support from the chip designers (because they’re in the same company), and so push new limits which just are not open to independent language designers. There’s a lot of innovative stuff in CUDA, and even more coming in the future, as a result of this close association.

One thing I’ve noticed with new codes is the productivity enhancement researchers are achieving by the judicious use of Python in scientific projects. I asked Stephen what combination of tools (that may include languages) he would recommend to scientists or engineers hoping to achieve maximum productivity so they can do their actual work:

Stephen Jones: I’m a believer in always writing software in the highest-level language and the fewest number of lines possible. Even in high-performance code, often just 10% of the lines of code are consuming 90% of the cycles. If this 10% implements a well-known algorithm (linear algebra, Fourier transform, etc.) then you can often use an off-the-shelf implementation which someone else has fine-tuned.

This means you can often get away with doing *everything* in a high-level language such as Python, with libraries like SciPy or NumPy doing the heavy lifting underneath. I’d say you’d be crazy not to – it leads to more maintainable code, with fewer lines and fewer bugs, and most importantly for industry: you can much more easily hire people who know Python than people who know arcane low-level parallel languages. Your code base then has much better longevity.
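To illustrate the point (this sketch is an editorial example, not part of Stephen's answer), here is a hand-written discrete Fourier transform next to the same computation delegated to NumPy's `numpy.fft.fft`. The helper names `naive_dft` and `library_dft` and the sample signal are hypothetical; the only library call used is `numpy.fft.fft`.

```python
import cmath

import numpy as np


def naive_dft(samples):
    """Textbook O(n^2) discrete Fourier transform in pure Python."""
    n = len(samples)
    return [
        sum(samples[k] * cmath.exp(-2j * cmath.pi * j * k / n) for k in range(n))
        for j in range(n)
    ]


def library_dft(samples):
    """The same transform, delegated to NumPy's tuned FFT routine."""
    return np.fft.fft(np.asarray(samples, dtype=float))


if __name__ == "__main__":
    # Hypothetical test signal; any real-valued sequence would do.
    signal = [0.0, 1.0, 0.0, -1.0, 0.0, 1.0, 0.0, -1.0]
    # Both routes should agree to within floating-point tolerance.
    print(np.allclose(naive_dft(signal), library_dft(signal)))
```

The library version is a single line, and the fine-tuning of the hot loop becomes someone else's maintenance burden, which is the trade being recommended.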

Where things get interesting is when the CPU-intensive operations are not standard algorithms. You then need to actually implement them yourself, and once again I’d recommend the highest-level language you can get away with. This involves thinking about the balance between maintainability and performance: you might want to accept not reaching peak performance in exchange for writing more maintainable, future-proof code. NumPy, for example, lets me do some CUDA-related parallelisation (sic) in a Python framework, with a very respectable speedup for very little effort.

At SpaceX, I target about 75% of peak performance on the grounds that the last 25% will make the code much harder to develop and – most importantly – much less future-proof. It’s simply not worth that extra 25% if I have to recode it for new architectures every few years. Admittedly, we don’t use Python for our performance code, but we do use it for a host of things which would be way too complicated in C++.

As for development tools – I’m always looking at what language and/or platform has the best diagnostic tools. Debuggers, profilers, and analysis can save me literally 50% of my development time. So a combination of that and of a high-level language is the first thing I go to. As you can tell, I’m a fan of Python, but it really will depend on the use-case. Matlab also has excellent tools, though it comes with framework lock-in.
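As an editorial illustration of the kind of diagnostic tooling mentioned here (not part of Stephen's answer), the sketch below uses Python's standard-library `cProfile` and `pstats` modules to find where a small program spends its time. The functions `hot_loop`, `cheap_setup`, `workload`, and `profile_report` are hypothetical stand-ins for real application code.

```python
import cProfile
import io
import pstats


def hot_loop(n):
    """Deliberately expensive: sum of squares in a pure-Python loop."""
    total = 0
    for i in range(n):
        total += i * i
    return total


def cheap_setup():
    """Deliberately cheap: builds a small list."""
    return list(range(10))


def workload():
    """Stand-in for an application with one hot spot."""
    cheap_setup()
    return hot_loop(200_000)


def profile_report(func):
    """Run func under cProfile and return (result, text report)."""
    profiler = cProfile.Profile()
    result = profiler.runcall(func)
    buffer = io.StringIO()
    stats = pstats.Stats(profiler, stream=buffer)
    # Sort by cumulative time and show only the top few entries.
    stats.sort_stats("cumulative").print_stats(5)
    return result, buffer.getvalue()


if __name__ == "__main__":
    result, report = profile_report(workload)
    print(report)
```

In the report, `hot_loop` should account for nearly all of the time spent inside `workload`, which identifies it as the small fraction of the code worth optimising or handing to a library.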

A word on other types of language. Haskell, in particular, is getting a lot of traction these days. As a functional programming language, it parallelises (sic) much more naturally than an imperative language and it’s looking interesting. Academia is very interested in it, but I think that the talent-pool is too small for me to use it in industry: hiring good people is hard enough for Python, let alone for C.

I followed up by asking what he saw as the future of FORTRAN, a language still frequently used in engineering analyses.

Stephen Jones: It’ll go away as computers become less able to run it. It is not intrinsically parallel, so you need to wrap a framework such as MPI around it. I think that larger (exascale) computers will be increasingly difficult to program with MPI, and that this will mark the end of FORTRAN. Unlike C, FORTRAN has no presence in low-level programming so it’ll fade as the dusty decks are finally rewritten.

A final word, looking into my crystal ball: I think the future of programming lies with software-writing-software. That is to say, I’ll write a program which writes the programs for me. Compilers already do a lot for us; high-level frameworks like Ruby manage all sorts of complexity for web programming; auto-parallelisation (sic) is a big buzzword right now (though it’s not here yet). Computers are going to become increasingly hard to program, and we’ll only be able to do it with the help of other computers…

Moving on to the topic of working with what I like to call “real engineers” (disclosure: my first engineering job was in a ship-repair yard), Stephen was equally forthcoming.

Stephen Jones: It is really interesting to work as a software guy in a pure mechanical-engineering environment. A computer is a tool like any piece of lab equipment, and nobody could imagine doing engineering without one; however, non-software-engineers at all engineering companies I’ve seen use them like tools (which is to say in a fairly software-unsophisticated way).

Finally, software engineering and its relationship with engineering is a topic that really interests me. I asked Stephen for his thoughts on this.

Stephen Jones: This is another huge item. I live it every day at SpaceX, trying to bridge that gap. I went to a conference a few months ago on engineering simulation and met a dozen people who all had surprisingly similar stories to tell (as did I) – the lack of understanding about software is widespread, and a real handicap for engineers. We need to educate engineers far better than we do in the use and programming of computers – it’s not like a drill or a saw that you can just learn to use easily on the job. Those companies that spend the time to provide this education gain clear benefits in engineering innovation and productivity. This goes for all sciences, by the way, not just engineering. Working in HPC for NVIDIA, I met countless brilliant physicists, chemists, and biologists who were struggling to do their cutting-edge science with these massively complex supercomputers. The most successful ones were the ones who had actively learned to program, as against just muddled through (sic). In many cases, the less experienced physicist does better work because they can use the computer more effectively. There is undeniably an attitude – in both science and engineering – that software is “less pure”, but I suspect this will prove to be generational as people now grow up with computers at their fingertips from childhood.

Many thanks to Stephen for his unique perspective. At Rescale, we will continue working to enable scientists and engineers to achieve their goals by providing the scalable computing resources they need.