Leveraging Rescale for High-Order CFD Simulation

Background

Computational Fluid Dynamics (CFD) has undergone immense development as a discipline over the past several decades and is used routinely to complement empirical testing in the design of aircraft, automobiles, microelectronics, and products in many other industries. The vast majority of commercially available fluid flow solvers in use today exploit finite difference, finite volume, or finite element schemes to achieve second-order spatial accuracy. Thanks to considerable effort on the part of their original developers, these low-order schemes have become both robust and affordable while offering suitable accuracy for many flow problems.

While second-order methods have become widespread throughout both industry and academia, there are several important flow problems that require very low numerical dissipation and for which these methods are not well suited. Most of these involve vortex-dominated flows or aeroacoustics, and they are largely intractable without the aid of unstructured high-order methods.

Other situations arise where second-order accuracy may not lead to an acceptable overall solution. For example, an acceptable solution error in one variable (e.g. lift or pressure drag) may be accompanied by an unacceptable solution error in another (e.g. shear stress). In short, there are several fluid flow problems today for which a high-order spatial discretization may be advantageous. These higher-order schemes may offer increased accuracy for a comparable computational cost.
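To make the idea of "order of accuracy" concrete, the sketch below compares a second-order and a fourth-order central difference for the derivative of sin(x). This is a deliberately simple finite-difference stand-in, not the Flux Reconstruction scheme discussed later; the function names and the test point are illustrative assumptions.

```python
import math

def d1_second_order(f, x, h):
    """Second-order central difference for f'(x)."""
    return (f(x + h) - f(x - h)) / (2.0 * h)

def d1_fourth_order(f, x, h):
    """Fourth-order central difference for f'(x)."""
    return (-f(x + 2 * h) + 8 * f(x + h) - 8 * f(x - h) + f(x - 2 * h)) / (12.0 * h)

def observed_order(err_coarse, err_fine, ratio=2.0):
    """Estimate the convergence order from errors on two spacings h and h/ratio."""
    return math.log(err_coarse / err_fine) / math.log(ratio)

x0 = 1.0                      # illustrative evaluation point
exact = math.cos(x0)          # exact derivative of sin at x0
spacings = (0.1, 0.05)        # coarse and fine spacing (ratio of 2)

e2 = [abs(d1_second_order(math.sin, x0, h) - exact) for h in spacings]
e4 = [abs(d1_fourth_order(math.sin, x0, h) - exact) for h in spacings]

order2 = observed_order(*e2)
order4 = observed_order(*e4)

print(f"second-order stencil: errors {e2[0]:.2e}, {e2[1]:.2e}, observed order {order2:.2f}")
print(f"fourth-order stencil: errors {e4[0]:.2e}, {e4[1]:.2e}, observed order {order4:.2f}")
```

Halving the spacing reduces the second-order error by roughly a factor of 4 and the fourth-order error by roughly a factor of 16, which is the sense in which a higher-order scheme can reach a given accuracy with far fewer degrees of freedom.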

Analysis Description

To demonstrate running unstructured high-order simulations across multiple GPU co-processors on Rescale’s cloud-based HPC infrastructure, viscous subsonic flow over a NACA 0012 airfoil is simulated using PyFR [1]. While the simulation of laminar flow over a 2D airfoil is by no means novel, discretizing the computational domain with curved mesh elements and solving via Huynh’s Flux Reconstruction framework [2], together with its extension to three dimensions and sub-cell shock capturing schemes [3], represents the current state of the art in CFD.

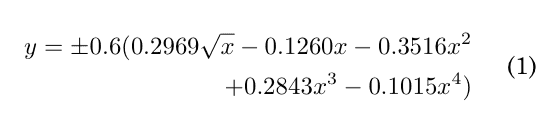

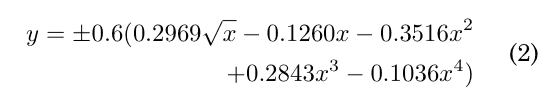

The governing equations are the Navier-Stokes equations with a constant ratio of specific heats γ = 1.4 and a Prandtl number of 0.72. The viscosity coefficient is computed via Sutherland’s law. Only a single flow condition is considered here, with a freestream Mach number M0 = 0.5 and an angle of attack α = 1°. The Reynolds number, Re = 5000, is based on the airfoil’s chord length. The NACA 0012 airfoil is defined in Eq. (1) as:

y = ±0.6 (0.2969 √x − 0.1260 x − 0.3516 x² + 0.2843 x³ − 0.1015 x⁴)    (1)

where x ∈ [0, 1]. The airfoil defined by this equation has a finite trailing-edge thickness of 0.252% of the chord. Various modifications of this definition exist in the literature that give the trailing edge zero thickness. Here, the variant that modifies the x⁴ coefficient is adopted, such that

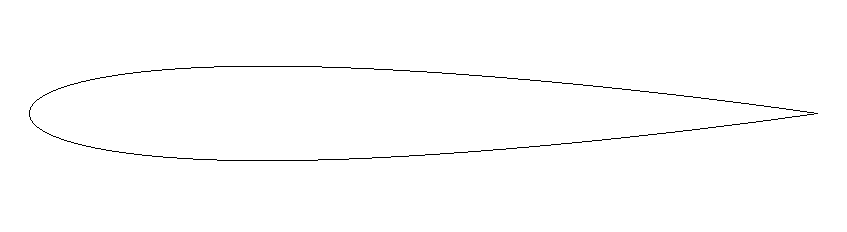
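The trailing-edge figures quoted above can be checked numerically. The sketch below assumes the standard published NACA 0012 half-thickness polynomial and the commonly used closed-trailing-edge variant in which the x⁴ coefficient changes from −0.1015 to −0.1036; the coefficient values and function name are assumptions, since the equations in this article are given as images.

```python
import math

# Assumed standard NACA 0012 coefficients for sqrt(x), x, x^2, x^3.
BASE_COEFFS = (0.2969, -0.1260, -0.3516, 0.2843)
X4_ORIGINAL = -0.1015   # assumed original x^4 coefficient (open trailing edge)
X4_CLOSED = -0.1036     # assumed modified x^4 coefficient (closed trailing edge)

def half_thickness(x, x4_coeff):
    """Half-thickness y(x) of the airfoil for x in [0, 1] (unit chord)."""
    a0, a1, a2, a3 = BASE_COEFFS
    return 0.6 * (a0 * math.sqrt(x) + a1 * x + a2 * x**2 + a3 * x**3 + x4_coeff * x**4)

# Full trailing-edge thickness (upper minus lower surface) at x = 1.
te_original = 2.0 * half_thickness(1.0, X4_ORIGINAL)
te_closed = 2.0 * half_thickness(1.0, X4_CLOSED)

# Full thickness near the point of maximum thickness (about 30% chord).
t_max = 2.0 * half_thickness(0.3, X4_ORIGINAL)

print(f"trailing-edge thickness, original: {100 * te_original:.3f}% of chord")
print(f"trailing-edge thickness, modified: {100 * te_closed:.3f}% of chord")
print(f"thickness at x = 0.3: {100 * t_max:.2f}% of chord")
```

With these coefficients the original definition leaves a gap of about 0.252% of the chord at x = 1, while the modified coefficients sum to zero there and close the trailing edge.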

The airfoil shape is depicted in Fig. (1) below.

The farfield boundary conditions are set to subsonic inflow and outflow, and the airfoil surface is set as a no-slip adiabatic wall.

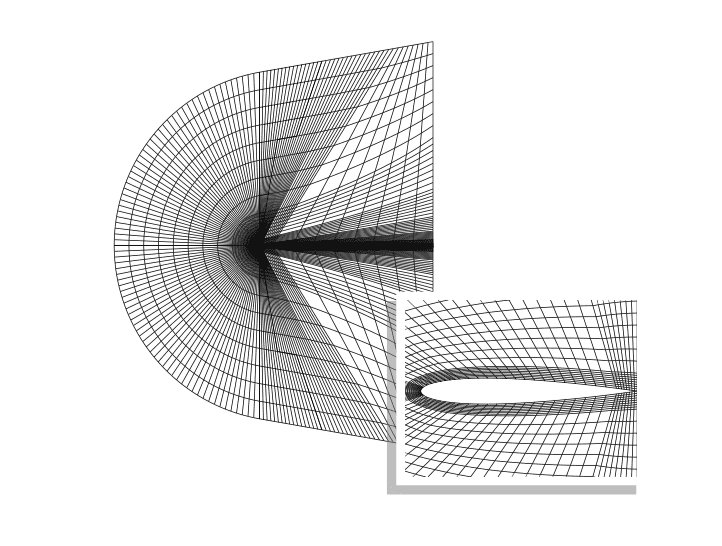

A mesh consisting of 8,960 quad elements is used to define the fluid domain. Third-order curvilinear elements are generated using Gmsh [4], an open source three-dimensional finite element meshing package developed by Christophe Geuzaine and Jean-François Remacle. The farfield boundary is a circle centered at the airfoil mid-chord with a radius of more than 1,000 chord lengths, chosen to minimize the effect of the farfield boundary on the lift and drag coefficients, as illustrated in Fig. (2).

Simulation Solution

PyFR is one of a select few open source projects that implement an efficient high-order advection-diffusion based framework for solving a range of governing systems on mixed unstructured grids containing various element types. PyFR is under active development by a team of researchers at Imperial College London promoting Huynh’s Flux Reconstruction approach. PyFR leverages CUDA and OpenCL libraries to run on GPU clusters and other streaming architectures in addition to more conventional HPC clusters.

Rescale has recently introduced a GPU “Core Type”, which allows end-users to configure their own GPU clusters and run their simulations on demand across multiple NVIDIA Tesla co-processor cards. This enables users to decompose large discretized computational domains into smaller sub-domains, each running concurrently on its own dedicated Tesla co-processor.

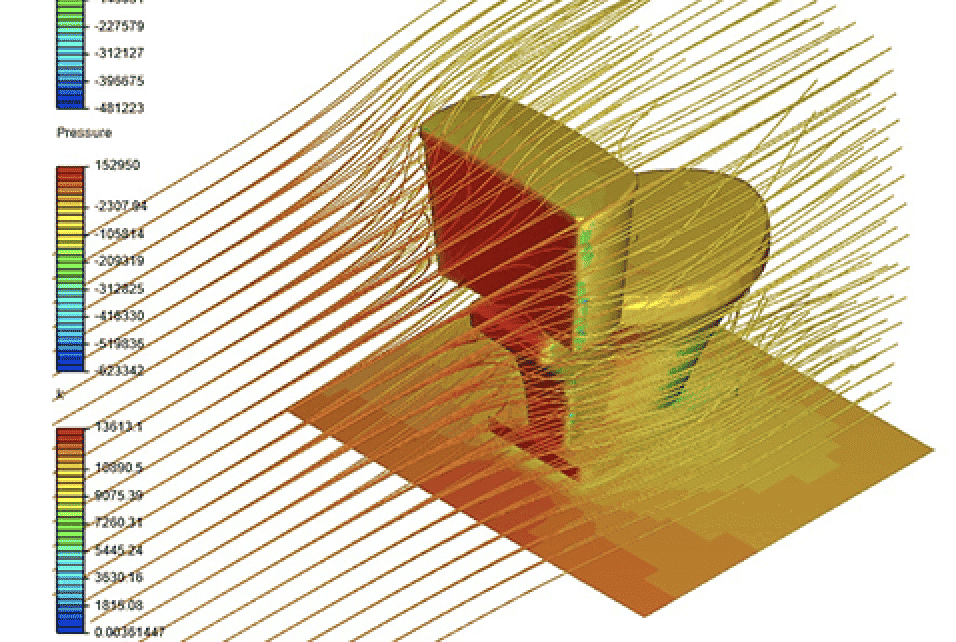

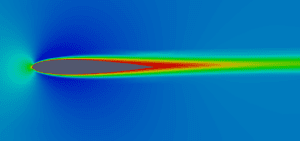

The discretized computational domain shown in Fig. (2) was decomposed into four parts and the simulation was run on Rescale using a GPU cluster consisting of two nodes and four NVIDIA Tesla co-processors. Distributing the simulation in this manner is purely illustrative, as the simulation requires only 65 MB of memory and could run entirely on a single GPU. A fourth-order spatial discretization (p4) solution was advanced in time via an explicit Runge-Kutta time integration scheme for 20 seconds of simulated time using a time step of 5.0e-05 seconds (i.e. 400,000 total steps).
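The run parameters above imply a few quantities that are easy to verify. The sketch below is back-of-the-envelope arithmetic only. It assumes that p4 denotes degree-4 polynomials on tensor-product quads, i.e. (4 + 1)² solution points per element, that the 2D compressible Navier-Stokes system carries four conserved variables, and that the four partitions are perfectly balanced; none of these details are stated in the article.

```python
# Values taken from the text.
t_end = 20.0          # simulated time in seconds
dt = 5.0e-05          # time step in seconds
n_elements = 8960     # quad elements in the mesh
n_parts = 4           # one partition per GPU

# Assumptions (not stated in the article).
poly_degree = 4                         # "p4" read as polynomial degree 4
pts_per_elem = (poly_degree + 1) ** 2   # tensor-product solution points per quad
n_vars = 4                              # rho, rho*u, rho*v, E in 2D
bytes_per_value = 8                     # double precision

n_steps = round(t_end / dt)
n_dof = n_elements * pts_per_elem * n_vars
elems_per_part = n_elements // n_parts  # idealized, perfectly balanced split
state_mb = n_dof * bytes_per_value / 1e6

print(f"time steps:               {n_steps}")
print(f"degrees of freedom:       {n_dof}")
print(f"elements per partition:   {elems_per_part}")
print(f"one copy of the solution: {state_mb:.2f} MB")
```

Under these assumptions a single copy of the solution is only a few megabytes, which is consistent with the remark that the case fits comfortably on one GPU; the total footprint also includes Runge-Kutta stage storage, gradients, and operator matrices, which this estimate does not attempt to model.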

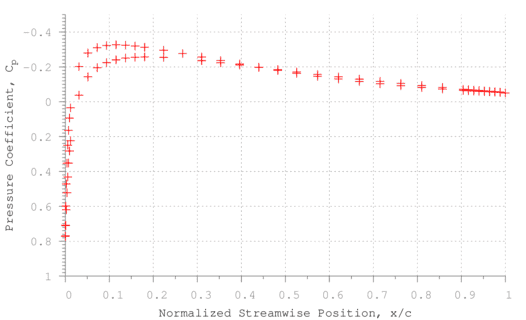

Figures (3) and (4) show the Mach contours and the distribution of the pressure coefficient, Cp, around the surface of the airfoil, respectively.

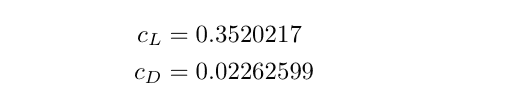
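For reference, the pressure coefficient plotted above is conventionally defined as Cp = (p − p∞) / (½ ρ∞ U∞²), which for a perfect gas can be written as Cp = 2 (p/p∞ − 1) / (γ M∞²). The sketch below evaluates this with the freestream values from the text; the function names are illustrative, and the stagnation value uses the inviscid isentropic relation as an assumption, so the viscous result at Re = 5000 may differ slightly.

```python
GAMMA = 1.4      # ratio of specific heats (from the text)
MACH_INF = 0.5   # freestream Mach number (from the text)

def pressure_coefficient(p_over_pinf, mach_inf=MACH_INF, gamma=GAMMA):
    """Cp from the ratio of local to freestream static pressure (perfect gas)."""
    return 2.0 / (gamma * mach_inf**2) * (p_over_pinf - 1.0)

def isentropic_stagnation_ratio(mach_inf=MACH_INF, gamma=GAMMA):
    """p0 / p_inf for isentropic deceleration to rest (inviscid assumption)."""
    return (1.0 + 0.5 * (gamma - 1.0) * mach_inf**2) ** (gamma / (gamma - 1.0))

cp_freestream = pressure_coefficient(1.0)
cp_stagnation = pressure_coefficient(isentropic_stagnation_ratio())

print(f"Cp in the undisturbed freestream:   {cp_freestream:.3f}")
print(f"Cp at an isentropic stagnation point: {cp_stagnation:.3f}")
```

At M∞ = 0.5 the isentropic stagnation value is slightly above 1 (about 1.064) because of compressibility, which gives a useful sanity check on the peak of the Cp curve near the leading edge.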

The coefficients of lift and drag were computed from the simulation’s results as:

with corresponding errors of 9.3876e-06 and 5.9600e-08, respectively. Here the error is measured relative to a reference solution computed on a mesh of 143,360 quad elements, 16 times as many as in the mesh used above.

Summary

It has been shown [5] that the Flux Reconstruction algorithm used in PyFR can recover other well-known high-order schemes. As a result, it provides a unifying approach, or framework, for unstructured high-order CFD, one that is also particularly well suited to GPUs and other streaming architectures. As current advances in CFD become more mainstream, we may see a shift in the types of computing hardware on which these simulations are run.

Rescale has positioned itself at the forefront of these advancements by enabling users to provision their own custom GPU clusters to run a variety of scientific and engineering focused software tools which leverage these architectures with only a few simple clicks of a mouse in an easy-to-use, web-based interface.

Give PyFR a try and run your own simulation on Rescale today.

[2] H. T. Huynh, “A reconstruction approach to high-order schemes including discontinuous Galerkin for diffusion.” AIAA Paper 2009-403. Print.

[3] P. Persson and J. Peraire, “Sub-cell shock capturing for discontinuous Galerkin methods.” AIAA Paper 2006-112. Print.

[4] C. Geuzaine and J.-F. Remacle, “Gmsh: a three-dimensional finite element mesh generator with built-in pre- and post-processing facilities.” International Journal for Numerical Methods in Engineering 79 (2009): 1309-1331. Print.

[5] P. E. Vincent, P. Castonguay, and A. Jameson, “A New Class of High-Order Energy Stable Flux Reconstruction Schemes.” Journal of Scientific Computing. Web. 5 Sep. 2010.