Exploring the World of High Performance Computer Chips: Speed, Cost, and Energy Efficiency

A look at current trends in semiconductor technology and the future of high performance computing

High performance computing is an essential tool for scientific research, engineering, and product design.

High performance computing (HPC) systems perform complex calculations and simulations, often involving massive amounts of data. However, building and operating HPC systems can be expensive, and energy consumption is a major concern. The new wave of specialized computer chips offers a way to improve the performance and energy efficiency of HPC systems while reducing costs.

The limitations of traditional CPU (central processing unit) architectures have led to the development of specialized computer chips for a wide range of applications, including HPC. The challenge, however, is that engineering and scientific computing is becoming increasingly complex.

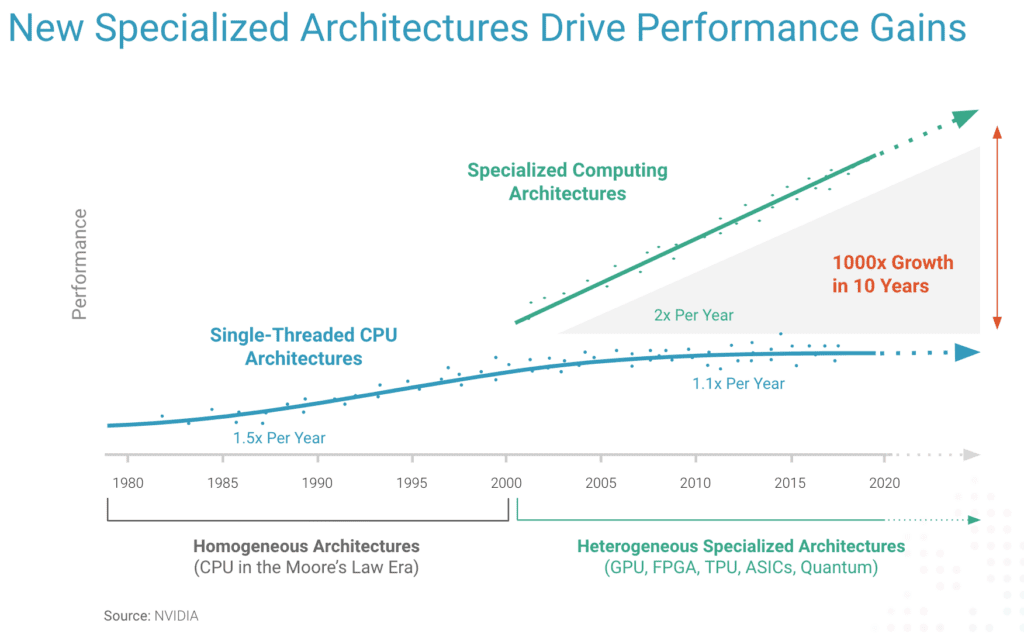

The leveling off of Moore’s Law and the accelerating adoption of Arm-based architectures by a growing number of non-traditional chip makers are transforming the semiconductor market. Even cloud providers such as AWS, Microsoft Azure, and Google Cloud are designing their own chips.

As a result, the variety of semiconductors has exploded. The number of specialized chip options, including graphics processors, tensor processors, and other variants, has grown 1,000 percent in 10 years. In 2022 alone, cloud service providers introduced almost 400 new hardware options. Currently, organizations can choose from more than 1,500 different instance types.

These new types of chips are often designed for specific tasks, such as graphics processing, machine learning, and artificial intelligence. For those tasks, specialized chips can often handle complex calculations more efficiently than traditional CPUs, with significantly lower power consumption and cost. As a result, they are also proving well suited to HPC workloads in R&D.

New Players in the Semiconductor Market

One of the most prominent players in the specialized chip space is NVIDIA, particularly for GPUs (graphics processing units). The company has developed specialized GPUs for applications ranging from gaming to scientific computing.

In recent years, NVIDIA has also invested heavily in artificial intelligence (AI) and machine learning, developing specialized hardware such as its Tensor Core GPUs. These chips are designed to accelerate the matrix calculations at the heart of AI applications, and they are driving significant performance improvements in these areas.

In addition to NVIDIA, major cloud service providers like Google Cloud, Amazon Web Services (AWS), and Microsoft Azure have developed their own specialized chips for cloud workloads, including AI and HPC.

Google has created custom AI chips called TPUs (tensor processing units). These chips are designed to handle machine learning workloads in Google’s cloud computing services, and they have shown significant performance improvements over traditional CPUs on those workloads. TPUs were originally built around the TensorFlow framework, which is widely used in machine learning applications, and they now also support frameworks such as JAX and PyTorch.

AWS has also entered the semiconductor business, developing its own custom Arm-based chips for use in its cloud computing services. These chips are driving performance, cost, and energy improvements for cloud computing. The AWS Graviton processor, for example, is optimized for scale-out workloads and is designed to provide a cost-effective alternative to traditional x86-based processors.

FPGAs (field-programmable gate arrays) are another type of specialized chip that is becoming increasingly popular in HPC applications. Because their logic can be reconfigured after manufacturing to implement a specific task directly in hardware, they are well suited to certain compute-intensive HPC workloads. For those workloads they can also be highly energy efficient, with significantly lower power consumption than traditional CPUs.

FPGAs excel at certain types of calculations, such as those involved in image processing and data compression. However, they can be more difficult to program than other chip options, and they may not be the best choice for all HPC workloads.

Microsoft has used FPGAs to accelerate AI in its cloud computing services. Project Brainwave is a deep learning platform that uses FPGAs to provide high-performance, low-latency processing for machine learning workloads. Microsoft has also developed a custom chip called the Holographic Processing Unit (HPU), which is used in its HoloLens mixed-reality headset.

Intel has also pursued specialized HPC silicon, most notably with its Xeon Phi line of processors, which has since been discontinued. These many-core chips were designed specifically for HPC applications, with high core counts and support for wide vector processing. They also aimed for energy efficiency, delivering more performance per watt than traditional CPUs on highly parallel code.

The use of specialized chips from both traditional and new vendors can offer significant benefits for digital research and engineering tasks. When accessed through the cloud, they are also often available on a pay-as-you-go basis, which can make them more cost-effective for certain workloads.

However, to get the most value out of today’s new computer chips from cloud service providers, organizations might need to use specialized software and hardware components to take advantage of a chip’s processing capabilities.

Chip Choices: How to Power Your HPC

The proliferation of architecture choices and cloud services opens up tremendous potential to change how scientific, engineering, and design breakthroughs happen. It also creates an overwhelming and ever-changing set of options, each with significant cost, performance, and sustainability trade-offs, so organizations need to choose wisely.

Getting it right means accelerating innovation while controlling costs. Getting it wrong means broken budgets and bottlenecks to innovation.

For example, GPUs are well suited to highly parallel calculations, such as those involved in graphics processing and machine learning. On those workloads they can provide significant performance improvements over traditional CPUs, with lower power consumption and cost per job. However, workloads that do not parallelize well may see little benefit, so GPUs are not the best choice for every HPC workload.
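To make this trade-off concrete, here is a minimal Python sketch of a cost-per-job comparison under pay-as-you-go pricing. The instance names (`cpu-instance`, `gpu-instance`), hourly prices, and runtimes are hypothetical placeholders, not real cloud prices or benchmark results. In practice you would substitute measured runtimes for your own workload.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InstanceOption:
    """One hypothetical instance type and how long a given job takes on it."""
    name: str
    price_per_hour: float  # hypothetical on-demand price, USD per instance-hour
    runtime_hours: float   # hypothetical wall-clock time to finish one job

    def cost_per_job(self) -> float:
        # Pay-as-you-go billing: you pay only for the hours the job runs.
        return self.price_per_hour * self.runtime_hours


def cheapest(options: list[InstanceOption]) -> InstanceOption:
    """Return the option with the lowest cost to finish one job."""
    return min(options, key=lambda o: o.cost_per_job())


# Workload A parallelizes well: the GPU instance costs 4x more per hour
# but finishes the job in 1.5 hours instead of 10.
workload_a = [
    InstanceOption("cpu-instance", price_per_hour=3.00, runtime_hours=10.0),
    InstanceOption("gpu-instance", price_per_hour=12.00, runtime_hours=1.5),
]

# Workload B parallelizes poorly: the GPU instance is only 2x faster.
workload_b = [
    InstanceOption("cpu-instance", price_per_hour=3.00, runtime_hours=10.0),
    InstanceOption("gpu-instance", price_per_hour=12.00, runtime_hours=5.0),
]

for label, options in (("A", workload_a), ("B", workload_b)):
    for o in options:
        print(f"workload {label}: {o.name}: ${o.cost_per_job():.2f} per job")
    print(f"workload {label}: cheapest is {cheapest(options).name}")
```

With these made-up numbers, the GPU option costs $18.00 per job on workload A versus $30.00 on the CPU, but $60.00 versus $30.00 on workload B. The hourly price alone says little: the faster chip wins only when the speedup outweighs its higher rate. A fuller comparison might also weigh time-to-solution and energy use.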

The choice of chip also depends on the software and hardware ecosystem surrounding an HPC system. Different chip architectures require different software and hardware components, which can influence the cost and complexity of the system. Supporting certain kinds of chips may also require specialized expertise to develop and maintain these systems.

Despite these challenges, the evolution of the semiconductor industry will continue to drive the innovation of new, specialized computer chips and architectures that are even more effective at powering high performance computing for R&D.

To learn more about how to take advantage of the latest advances in high performance computing hardware, contact a Rescale expert today.