Why Rescale is a Great Match for Researchers in Higher Education and Government Labs

The way we conduct research and make new discoveries in nearly every discipline relies more and more on computers. As machine learning, data science, new computationally intensive methods, and analysis of ever-larger data sets become commonplace, researchers increasingly need access to high performance computing (HPC) resources that are readily available and easy to use. A current example is the use of computational modeling techniques to combat COVID-19 from multiple angles, from screening potential drug candidates to modeling the spread of aerosol droplets. HPC also supports many research areas that directly benefit humanity, such as weather and climate prediction, car and airplane design, crime prevention, national defense, and, more recently, the development of new energy sources. As a result, publicly funded research in government institutions and higher education is easily the largest HPC category by industry spend.

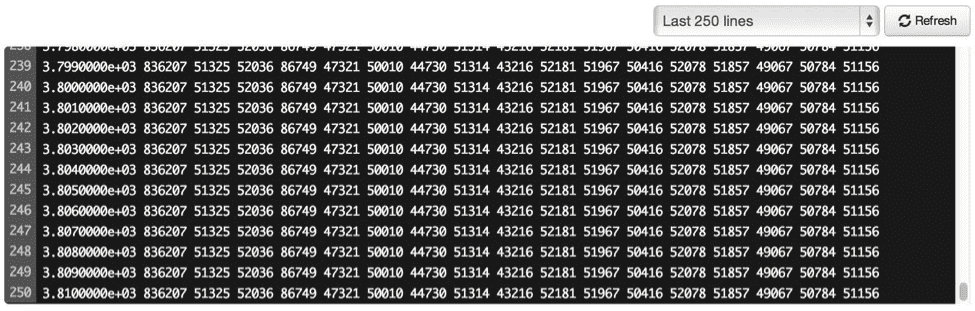

In my previous academic career I was funded by the National Institutes of Health (NIH) to develop novel methods to track changes to cognition that occur with development and neurodegenerative disease, using brain images obtained through magnetic resonance imaging. Because these brain data are noisy and require significant computational power to process and analyze, lack of access to large and stable computing infrastructure limited the size and impact of the research problems my colleagues and I could tackle. In life sciences companies, every dollar invested in HPC generates $504 in additional sales, but the benefit of HPC investment in higher education research is not as easy to calculate. While working with a colleague on a large-scale NIH grant proposal, I realized that we simply did not have the computing infrastructure to analyze, store, and share the terabytes of data collected in the multi-site studies that represented the future. We also lacked security mechanisms to properly protect data about identifiable individuals while still making the data usable to researchers.

This made cloud computing extremely appealing; it held the promise of allowing me to script my scientific workflows and even the computers to run them at any scale I needed and to collaborate with colleagues without moving, copying or editing datasets to remove restricted information. The problem was that the IT learning curve for cloud was very steep, even for the technical support staff. Although the tools were all there to develop an analysis and data sharing platform, none of us had the time, money, or skills to build it. At the very least, everyone needed an “easy button” to abstract away IT complexity.

Beyond Cloud Infrastructure: Meeting the Needs of Public Sector Cloud Practitioners

Fast forward a few years, and many cloud providers and partners have solutions that make it easier to create a cluster with the click of a button. However, in my subsequent roles (on the solution provider side) working with IT personnel who support researchers, I found that this was not enough as either a supplement to or a replacement for on-premise high performance computing. Most institutions are used to a specific way of managing HPC systems that has been in place for years, where they have worked out how to provide things like support and security, chargeback, and training. Cloud HPC paradigms allow users to scale out and leverage architectural flexibility, but they disrupt existing HPC ecosystems. Instead of paying a fixed cost for hardware, users pay for the resources they use, leading to unpredictable spend. Users can always have access to the latest hardware, but then have difficulty choosing their architecture and installing new drivers, middleware, and applications. It’s not easy to decide at an institutional level how to integrate cloud HPC resources with on-premise systems. Differences such as these pose new challenges to existing institutional systems for managing HPC.

Four specific challenges of cloud HPC are security and compliance, management, support, and maintaining the user experience.

Security & Compliance

Sensitive research data can be associated with significant risks or harm if exposed. These risks may run the gamut from revealing a health condition for an individual that might harm their chances of employment to exposing research on novel defense systems that could be exploited by bad actors. There is a financial risk to losing any data that cannot easily be recreated: universities in particular are some of the biggest targets for ransomware attacks. To mitigate these risks, research of different types and on different data must meet specific compliance requirements. The more responsibility for maintaining security and compliance an HPC solution can take on, the easier it is to integrate it.

HPC Management

In many government and higher education environments, users have very different application and system needs. IT needs centralized visibility and the ability to support all of these varied activities, which is challenging if users have different accounts with multiple cloud providers. Institutions also want to put hard limits on spending while ensuring that users have enough remaining budget to get their work done.
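To make the idea of a hard spending limit concrete, here is a minimal Python sketch of a per-department budget guard. It is purely illustrative: the names `DepartmentBudget` and `estimate_cost`, and the prices in the usage note, are hypothetical and do not correspond to any cloud provider's or vendor's API.

```python
from dataclasses import dataclass


@dataclass
class DepartmentBudget:
    """Hypothetical monthly budget tracker for one department."""
    monthly_limit: float  # hard cap, in dollars
    spent: float = 0.0    # dollars already charged this month

    def remaining(self) -> float:
        # Never report a negative balance.
        return max(self.monthly_limit - self.spent, 0.0)

    def can_run(self, estimated_cost: float) -> bool:
        # A job is allowed only if it fits in the remaining budget.
        return estimated_cost <= self.remaining()

    def charge(self, cost: float) -> None:
        # Enforce the hard limit before recording the spend.
        if not self.can_run(cost):
            raise ValueError("job would exceed the monthly budget")
        self.spent += cost


def estimate_cost(cores: int, hours: float, price_per_core_hour: float) -> float:
    """Pay-per-use estimate: cores x wall-clock hours x unit price."""
    return cores * hours * price_per_core_hour
```

For example, with a $1,000 monthly limit, a 64-core job that runs for 10 hours at an assumed $0.05 per core-hour would be estimated at $32 and accepted, while a job estimated at $2,000 would be rejected before it starts.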

HPC Support

As soon as you give users access to powerful new systems on cloud infrastructure, you need to help them run their scientific applications. This is not a tiny amount of work. Cloud HPC systems are complex beasts with different network fabrics, processor types, drivers and message passing libraries and middleware – when something unexpected happens (from poor job performance to job failure), the end users can’t handle the problems themselves. This is in contrast to HPC systems that institutions maintain, whether on-premise or in the cloud. Do-it-yourself HPC support means limiting choices of software and hardware to make support tractable, and maintaining a specialized staff to do so.

HPC User Experience

Even when it is easy to create HPC cloud infrastructure, there are challenges with supporting a positive user experience. Users are used to interactive, graphical environments on their workstations and this can be difficult to support on HPC systems. They struggle with selecting the optimal core types and parallelism for their applications. IT often wants an application programming interface to make it easy to integrate the user experience with their existing infrastructure. Most users want resources to be accessible from everywhere and to be able to collaborate easily with remote colleagues.

How Rescale Addresses Research Needs

Rescale is developed as a flexible cloud-enabled platform that makes it easier to run any science or engineering application on a wide selection of the latest specialized computing architectures. It is a browser-based application that automates the full stack of HPC software, infrastructure, and middleware on any cloud service – this packaging of managed services is often referred to as HPC as a service. Rescale offers over 1000 applications and allows users to bring their own. Rescale is currently used to accelerate science, engineering, and R&D in virtually every industry. However, it has key features that address the problems described above that researchers in higher education and government labs face.

First, Rescale supports a variety of security and compliance standards, including SOC-2 Type 2, ISO 27001, ITAR, DFARS, and GDPR. Of special note, the Rescale ScaleX Government platform is the first and only hybrid cloud HPC platform to secure FedRAMP Moderate Authority to Operate. This makes it significantly easier for institutions to implement secure and compliant cloud environments.

Rescale is an enterprise application with organizational and workspace controls, allowing administrators to do things like limit software applications or Coretypes for specific departments, set monthly budgets, and monitor activity across multiple cloud providers. Rescale’s performance optimization tools even use trained machine learning models to make recommendations about what core types would be more efficient or less costly to run a job.

Rescale scales HPC support to match the scalability and flexibility of cloud HPC architectures. If a problem occurs anywhere between the cloud architecture and the application, Rescale has the expertise to fix it. Without support, institutions cannot help users take advantage of the cloud by running more applications, new computing infrastructure, and more jobs. Rescale recently announced our Slurm connector, which allows users to leverage Rescale through the familiar Slurm queueing interface. This capability makes it even easier to integrate with existing HPC systems, providing bursting capabilities with no maintenance of additional infrastructure.

Finally, Rescale was designed with the user experience in mind, so that nearly anyone can submit jobs without having to worry about modifying the underlying commands or software to reflect the underlying hardware. We recently launched workstations to provide a seamless graphical front end to an HPC cluster. And we recently announced the world’s first Compute Recommendation Engine to help users select pre-configured Coretypes that can optimize cost and/or performance. Users are able to identify the best resources for their problems, which allows them to use research funding most efficiently to meet their goals.
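The trade-off between cost and performance when choosing a core type can be illustrated with a toy Python sketch. This is not how Rescale's recommendation tools work (those are described above as using trained machine learning models); it simply compares hypothetical core types by an assumed price and an assumed relative speed. All names and numbers here are made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CoreType:
    """Hypothetical core type; prices and speeds are illustrative only."""
    name: str
    price_per_core_hour: float  # dollars
    relative_speed: float       # throughput relative to a baseline core


def job_estimate(core: CoreType, baseline_core_hours: float) -> tuple[float, float]:
    """Return (core-hours needed, total cost) for a job on this core type."""
    hours = baseline_core_hours / core.relative_speed
    return hours, hours * core.price_per_core_hour


def recommend(core_types: list[CoreType], baseline_core_hours: float,
              objective: str = "cost") -> CoreType:
    """Pick the core type that minimizes cost or time for the job."""
    if objective not in ("cost", "time"):
        raise ValueError("objective must be 'cost' or 'time'")
    index = 1 if objective == "cost" else 0
    return min(core_types,
               key=lambda c: job_estimate(c, baseline_core_hours)[index])
```

With a slower core at $0.04 per core-hour and a core that is twice as fast at $0.10 per core-hour, a job needing 100 baseline core-hours costs $4 on the slower core and $5 on the faster one, so the choice depends on whether the user is optimizing for budget or for turnaround time.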

Today, scientists, researchers, and engineers from public labs, agencies, and contractors are conducting R&D on Rescale to accelerate their innovation initiatives. From investments in new energy sources and critical infrastructure to understanding changing climate events and public health research, Rescale enables fast and efficient deployment of grant funding, coupled with an intuitive user experience that lets anyone get started today. Thankfully, the days of waiting in line for computing capacity and making expensive upfront investments are behind us. I’m excited to continue seeing more impact from the latest R&D technologies in the hands of the brightest minds trying to solve the world’s most important problems.

To get started and learn more about Rescale for Public Sector organizations, request a demo here. And if you are a student, researcher, or educator in higher education, Rescale may be able to provide you with free computing resources for your next project; request a demo here to find out.