Revolutionizing AI Engineering: Exploring Generative Design and Physics Informed Neural Networks

Learn how researchers and engineers are using AI to further accelerate their ability to make breakthrough discoveries and innovative designs

Artificial Intelligence (AI) has revolutionized many industries, including engineering. It is providing all-new approaches to complex problem solving. One of the latest innovations in AI engineering is the use of generative design and physics informed neural networks (PINNs).

This exciting technology combines the power of deep learning with the fundamental principles of physics to create models that can generate optimized designs and solve complex engineering problems. In this blog, we will explore the world of AI engineering, generative design, machine learning (ML), and PINNs, and how they are transforming the way engineers approach design and problem solving.

We will delve into the underlying concepts, applications, benefits, and challenges of this emerging technology, and discuss its potential impact on the engineering industry.

What Is AI and ML Engineering?

AI engineering involves building systems that can perceive and reason about the world, interact with humans or other agents, and perform tasks that would normally require human intelligence. This can involve developing natural language processing (NLP) systems, computer vision algorithms, or intelligent decision-making processes for model-based systems engineering.

Machine Learning (ML)

ML engineering, on the other hand, is concerned with the development and deployment of machine learning models that can learn from data and make predictions or decisions based on that learning. This can involve building and training models using a variety of machine learning algorithms, optimizing their performance, and deploying them in production environments.

Overall, AI and ML engineering require a deep understanding of both computer science and statistics, as well as expertise in software engineering, data science, and cloud computing. These skills are in high demand as organizations across industries seek to leverage the power of AI and ML to improve their operations and gain a competitive edge.

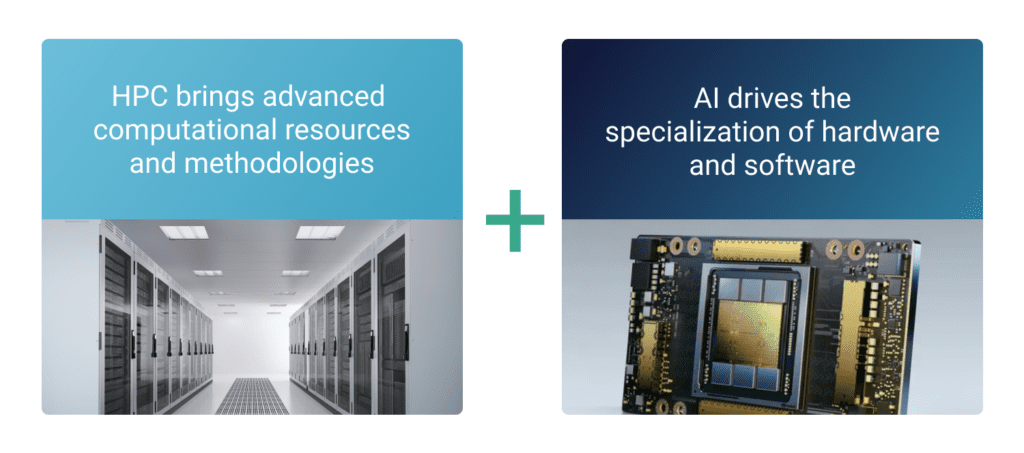

The Convergence of High Performance Computing (HPC) and Artificial Intelligence (AI)

The convergence of high performance computing (HPC) and artificial intelligence (AI) refers to the growing integration of these two technologies to support faster and more powerful AI applications.

HPC involves the use of advanced computing technologies to solve complex problems, while AI involves the development of algorithms and software that can mimic human intelligence. Together, they accelerate computational research by streamlining digital testing and simulations.

The convergence of HPC and AI is happening because AI applications require vast amounts of computing power and data to function effectively. HPC technologies provide the computational resources necessary to train and run complex AI models, making it possible for them to process and analyze vast amounts of data in real time.

This convergence is expected to drive significant advancements in fields such as healthcare, finance, manufacturing, aerospace and automotive, where AI applications can help improve operations, reduce costs, and accelerate innovation.

Examples of this convergence can be seen in the development of specialized hardware for AI, such as graphics processing units (GPUs) and tensor processing units (TPUs), which leverage HPC technologies to accelerate the training and inference of AI models. Additionally, HPC infrastructure, such as high-speed networks and storage systems, supports distributed AI applications that process data across multiple nodes and data centers.

What Is Required for AI Engineering?

AI engineering often involves working with large datasets, complex models, and computationally intensive algorithms, which require high performance computing (HPC) architectures and specialized software to be effective. Here are some key components of HPC architecture and software that are typically used in AI engineering:

High Performance Computing (HPC) Cluster

A cluster of interconnected computers that work together to provide high processing power, storage capacity, and memory bandwidth for AI workloads.

GPU Accelerators

Graphics processing units (GPUs) are powerful processors that are well-suited for parallel processing, which is essential for AI workloads. GPUs are often used to accelerate deep learning algorithms, which are a key component of AI engineering.

High-Speed Interconnects

High-speed interconnects are used for fast communication between nodes in an HPC cluster. This is important for distributing workloads across the cluster and for data-intensive applications.

Distributed Storage

Distributed storage systems are used to store and manage large datasets that are required for AI workloads. These systems are designed to provide high throughput and low latency access to data.

AI Software Frameworks

AI software frameworks such as TensorFlow, PyTorch, and Caffe are commonly used in AI engineering. These frameworks provide libraries and tools for building and training AI models, as well as deploying them in production environments.

Workflow Orchestration Tools

Workflow orchestration tools are used to manage complex AI workflows that involve multiple tasks, such as data preprocessing, model training, and deployment. Examples of such tools include Apache Airflow, Kubeflow, and Argo Workflows.
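To make the idea of orchestration concrete, here is a minimal sketch of running tasks in dependency order using only Python's standard library. It is not the API of Apache Airflow, Kubeflow, or Argo Workflows; the task names and the `run_pipeline` helper are hypothetical, and a real orchestrator would also handle scheduling, retries, and distributed execution.

```python
from graphlib import TopologicalSorter

# Each task maps to the set of tasks it depends on (hypothetical pipeline).
pipeline = {
    "preprocess": set(),
    "train": {"preprocess"},
    "evaluate": {"train"},
    "deploy": {"evaluate"},
}


def run_pipeline(dag):
    """Visit tasks in dependency order and return the execution order."""
    executed = []
    for task in TopologicalSorter(dag).static_order():
        # A real orchestrator would launch a job here; this sketch only records it.
        executed.append(task)
    return executed


order = run_pipeline(pipeline)
```

The point of the sketch is the dependency graph: no task runs before the tasks it depends on have finished.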

Cloud Computing

Cloud computing platforms, such as Amazon Web Services (AWS), Microsoft Azure, and Google Cloud, provide access to scalable HPC resources, AI software frameworks, and workflow orchestration tools that are required for AI engineering. Cloud computing can also be used to run AI workloads at scale and to support collaborative AI projects.

Overall, the HPC architecture and software needed for AI engineering will depend on the specific requirements of the project and the available resources. However, the above components are essential for building and deploying effective AI solutions.

What Are the Four Types of AI Learning for Engineering?

Supervised Learning

In supervised learning, an algorithm is trained using labeled data, where the input data is paired with the corresponding output or target. The algorithm learns to map inputs to outputs by minimizing the difference between its predictions and the actual outputs.
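As a small illustration, the sketch below fits a straight line to labeled pairs by least squares, which is one of the simplest forms of supervised learning. The load and deflection values are made-up example data, and `fit_line` is a hypothetical helper rather than part of any library.

```python
def fit_line(xs, ys):
    """Least-squares fit of y = slope * x + intercept to labeled pairs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Closed-form minimizer of the squared prediction error.
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical labeled data: applied load (input) and measured deflection (target).
loads = [1.0, 2.0, 3.0, 4.0]
deflections = [3.0, 5.0, 7.0, 9.0]
slope, intercept = fit_line(loads, deflections)
predicted = slope * 5.0 + intercept  # prediction for an unseen input
```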

Unsupervised Learning

In unsupervised learning, the algorithm is given unlabeled data and must find patterns or structure in the data without any guidance. The algorithm learns to identify relationships and correlations between the data points and group them together based on similarities.
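A classic example of this grouping is k-means clustering. The sketch below is a bare-bones version for scalar data with made-up readings and hand-picked starting centers; production code would normally rely on a library implementation.

```python
def kmeans_1d(points, centers, iterations=10):
    """Plain k-means on scalar data, starting from the given centers."""
    centers = list(centers)
    clusters = [[] for _ in centers]
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest center.
        clusters = [[] for _ in centers]
        for p in points:
            nearest = min(range(len(centers)), key=lambda i: abs(p - centers[i]))
            clusters[nearest].append(p)
        # Update step: move each center to the mean of its cluster.
        centers = [
            sum(c) / len(c) if c else centers[i]
            for i, c in enumerate(clusters)
        ]
    return centers, clusters


# Hypothetical unlabeled sensor readings that fall into two natural groups.
readings = [1.0, 1.2, 0.8, 5.0, 5.2, 4.8]
centers, clusters = kmeans_1d(readings, centers=[0.0, 6.0])
```

No labels are supplied at any point; the two groups emerge purely from the distances between readings.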

Semi-Supervised Learning

In semi-supervised learning, the algorithm is trained on a combination of labeled and unlabeled data. The labeled data is used to guide the learning process, while the unlabeled data is used to improve the accuracy of the model.
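One simple way to do this is self-training: fit a model on the labeled points, use it to assign provisional labels to the unlabeled points, then refit on everything. The sketch below assumes a nearest-centroid classifier on scalar data; the labels and values are invented for illustration.

```python
def centroid(values):
    return sum(values) / len(values)


def self_train(labeled, unlabeled):
    """One round of self-training with a nearest-centroid classifier.

    labeled: list of (value, label) pairs; unlabeled: list of values.
    """
    # Step 1: fit class centroids on the labeled data only.
    by_label = {}
    for value, label in labeled:
        by_label.setdefault(label, []).append(value)
    centroids = {label: centroid(vals) for label, vals in by_label.items()}

    # Step 2: pseudo-label each unlabeled point with its nearest centroid.
    for value in unlabeled:
        label = min(centroids, key=lambda lab: abs(value - centroids[lab]))
        by_label[label].append(value)

    # Step 3: refit the centroids on labeled plus pseudo-labeled data.
    return {label: centroid(vals) for label, vals in by_label.items()}


# Hypothetical data: two labeled parts and four unlabeled measurements.
refined = self_train([(1.0, "ok"), (9.0, "faulty")], [2.0, 3.0, 7.0, 8.0])
```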

Reinforcement Learning

In reinforcement learning, the algorithm learns by interacting with an environment and receiving rewards or punishments based on its actions. The algorithm learns to maximize its rewards by selecting the actions that lead to the best outcomes.
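The sketch below shows the idea with tabular Q-learning on a toy environment: an agent on a line of five positions earns a reward only when it reaches the rightmost one. To keep the example reproducible, the agent tries every action in every state on each sweep instead of exploring randomly, which is a simplification of how exploration is usually done. All constants are illustrative assumptions.

```python
N_STATES = 5          # positions 0..4 on a line; position 4 is the goal
ACTIONS = (-1, +1)    # move left or move right
GAMMA = 0.9           # discount factor
ALPHA = 0.5           # learning rate


def step(state, action):
    """Toy environment: returns (next_state, reward)."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    reward = 1.0 if next_state == N_STATES - 1 else 0.0
    return next_state, reward


def train(sweeps=200):
    """Q-learning updates over a deterministic sweep of states and actions."""
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(sweeps):
        for state in range(N_STATES - 1):  # the goal state is terminal
            for a_idx, action in enumerate(ACTIONS):
                next_state, reward = step(state, action)
                # Move the estimate toward reward plus discounted future value.
                target = reward + GAMMA * max(q[next_state])
                q[state][a_idx] += ALPHA * (target - q[state][a_idx])
    return q


q_table = train()
# Greedy policy: in each non-terminal state, pick the action with the highest value.
policy = [ACTIONS[row.index(max(row))] for row in q_table[:-1]]
```

After training, the greedy policy moves right from every position, because that is the route that leads to the reward.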

Understanding HPC in AI

HPC in AI refers to the use of supercomputers and parallel processing techniques to perform computationally intensive AI tasks at a much faster rate than traditional computing methods.

AI algorithms often require massive amounts of data to be processed and analyzed, which can be extremely time-consuming when done on a single processor. HPC in AI allows researchers and practitioners to scale up their computations by distributing the workload across multiple processors, allowing them to analyze vast amounts of data in a significantly shorter amount of time.
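The split, process, and combine pattern behind this can be sketched with Python's standard library. The example below uses a thread pool purely to show the structure; because of CPython's global interpreter lock, threads do not speed up CPU-bound work like this, and on a real cluster the same pattern would typically run across processes or nodes (for example with MPI). The function names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def sum_of_squares(chunk):
    """Work done independently on one chunk of the data."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(values, workers=4):
    """Split the data into chunks, process each on a worker, combine results."""
    values = list(values)
    chunk_size = max(1, -(-len(values) // workers))  # ceiling division
    chunks = [values[i:i + chunk_size] for i in range(0, len(values), chunk_size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partial_sums = list(pool.map(sum_of_squares, chunks))
    # Reduction step: merge the partial results into the final answer.
    return sum(partial_sums)


total = parallel_sum_of_squares(range(1000), workers=4)
```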

HPC in AI can be used for a variety of applications, including image and speech recognition, natural language processing, deep learning, and more. By leveraging the power of HPC, AI researchers and practitioners can develop more accurate and efficient algorithms, leading to faster innovation and discoveries.

High Performance AI

High performance AI refers to the use of advanced computing technologies and techniques to achieve faster and more efficient AI processing. This involves using specialized hardware such as graphics processing units (GPUs), field-programmable gate arrays (FPGAs), and application-specific integrated circuits (ASICs) to speed up the computations required for AI tasks such as machine learning and deep learning.

High performance AI helps researchers and practitioners develop more complex and sophisticated AI and physics models, process larger datasets, and make faster and more accurate predictions. High performance AI is important to a wide range of fields, from healthcare and finance to transportation and manufacturing. It can also help to democratize access to AI, making it more available to researchers and practitioners around the world.

Will Engineers Be Replaced by AI?

Some may fear that AI will replace engineers, but this is unlikely to happen in the near future. AI can automate certain tasks, but it cannot replace the creativity, problem solving, and critical thinking skills that human engineers possess. Instead, AI and ML will likely enhance the work of engineers by automating routine tasks and providing new tools and capabilities for engineers to work with.

AI is best suited for tasks that are repetitive, data-driven, and can be automated, such as data analysis, quality control, or predictive maintenance. However, engineering involves many tasks that require human expertise and experience, such as design, innovation, and complex problem solving. These tasks require critical thinking, creativity, and a deep understanding of both the technical and practical aspects of engineering.

Moreover, AI is not capable of replacing the human interaction and communication required in many engineering projects. Collaboration and teamwork are essential in engineering, and AI is not yet advanced enough to fully replace the human element in these processes.

Generative Design in HPC

In the context of high performance computing (HPC), generative design refers to the use of computational algorithms to explore and assess complex designs for engineering and manufacturing applications.

Generative design in HPC involves using computer simulations to create and evaluate multiple design options, taking into account various factors such as material properties, structural loads, and manufacturing constraints. The approach relies on advanced algorithms and optimization techniques that allow designers to explore a much larger design space than would be possible using traditional design methods.

Generative design works by using machine learning algorithms to analyze and learn from large amounts of data, which are then used to generate new designs. This process is iterative, meaning that the algorithms refine their understanding of the problem space with each new set of designs generated.

Once the algorithm has generated a set of potential designs, the designer can review and evaluate each one based on its adherence to the design constraints and objectives. The designer can also choose to modify the design criteria, rerun the algorithm, and generate a new set of designs to explore different design options.
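The generate, evaluate, and select loop can be sketched with a deliberately simple problem: choosing the width and height of a solid rectangular beam so that it carries a bending moment with as little material as possible. The brute-force grid search below is a stand-in for the far more sophisticated search and optimization methods used in real generative design tools, and the load, stress limit, and candidate sizes are made-up values.

```python
def bending_stress(moment, width, height):
    """Peak bending stress of a solid rectangular section: 6M / (b h^2)."""
    return 6.0 * moment / (width * height ** 2)


def generate_designs(moment, max_stress, widths, heights):
    """Enumerate candidate sections, keep feasible ones, rank by area."""
    feasible = []
    for width in widths:
        for height in heights:
            if bending_stress(moment, width, height) <= max_stress:
                feasible.append(
                    {"width": width, "height": height, "area": width * height}
                )
    # Smaller cross-sectional area means less material for the same length.
    return sorted(feasible, key=lambda d: d["area"])


# Hypothetical inputs: 1000 N m moment, 100 MPa allowable stress, sizes in meters.
widths = [0.01 * i for i in range(1, 6)]
heights = [0.01 * i for i in range(1, 11)]
ranked = generate_designs(1000.0, 100e6, widths, heights)
best = ranked[0]
```

Changing the stress limit or the candidate sizes and rerunning the function mirrors the step where a designer modifies the criteria and generates a new set of options.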

Generative design in HPC is particularly useful in fields such as aerospace and automotive engineering, where the optimization of complex structures can significantly improve performance and reduce weight. The technique is also becoming increasingly popular in the design of consumer products, where it can help to improve product performance and reduce manufacturing costs.

The 3 Types of Generative Models

Autoregressive models

These models generate data by predicting the probability distribution of the next value in a sequence given the previous values. Examples of autoregressive models include language models such as GPT-3 (Generative Pre-Trained Transformer 3) and image generation models such as PixelCNN.
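A character-level bigram model is about the smallest autoregressive model there is: it predicts the next character from only the previous one. The sketch below is a toy with a made-up training string, and is in no way how GPT-3 or PixelCNN are implemented, but the generate-one-step-at-a-time loop is the same idea.

```python
from collections import Counter, defaultdict


def fit_bigram(text):
    """Count how often each character follows each other character."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(text, text[1:]):
        counts[prev][nxt] += 1
    return counts


def next_char_distribution(counts, prev):
    """P(next | prev), estimated from the counts."""
    total = sum(counts[prev].values())
    return {ch: n / total for ch, n in counts[prev].items()}


def generate(counts, start, length):
    """Greedy generation: repeatedly append the most likely next character."""
    out = start
    for _ in range(length):
        followers = counts.get(out[-1])
        if not followers:
            break  # no observed continuation for this character
        out += followers.most_common(1)[0][0]
    return out


model = fit_bigram("abababac")
dist = next_char_distribution(model, "a")
sample = generate(model, "a", 4)
```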

Variational autoencoders (VAEs)

These models use an encoder to map input data into a lower-dimensional latent space and a decoder to generate new data by sampling from the distribution in the latent space. VAEs are commonly used in image and video generation tasks.

Generative adversarial networks (GANs)

GANs consist of two neural networks, a generator and a discriminator, that are trained together in a game-like manner. The generator generates new data that aims to fool the discriminator into thinking it is real, while the discriminator tries to distinguish between real and generated data. GANs are widely used in image, video, and audio generation tasks.

Each of these generative models has its own strengths and weaknesses, and the choice of model depends on the specific application and type of data being generated.

Four Key Concepts of Generative Learning

Generative learning is an approach to learning that involves creating mental models and using them to generate new knowledge and solve problems. The four key concepts of generative learning are:

Active engagement: Generative learning involves actively engaging with the material, such as by asking questions, making connections, and generating examples. This requires a deeper level of processing than passive learning and can lead to better retention and understanding of the material.

Meaningful learning: Generative learning involves creating meaningful connections between new information and existing knowledge. This can be done by relating the new information to personal experiences, using analogies, or creating mental models.

Metacognition: Generative learning involves being aware of one’s own thinking and learning processes. This includes monitoring one’s own comprehension, identifying areas of confusion, and using strategies to overcome obstacles to learning.

Self-explanation: Generative learning involves self-explanation, or explaining the material in one’s own words. This requires actively processing the material and can help to clarify understanding and identify areas of confusion.

By incorporating these key concepts, generative learning can lead to deeper understanding and better retention of information, as well as the ability to transfer knowledge to new situations and solve problems more effectively.

Examples of Generative Design

One example of generative design is Autodesk’s Project Dreamcatcher, which is a generative design system that has been used in the design of various products and structures, including furniture, automotive components, and building structures.

For instance, in the design of a chair using Project Dreamcatcher, the designer would input the design criteria, such as the desired size and shape of the chair, the weight it should support, and the materials to be used. The algorithm would then generate thousands of potential designs that meet these criteria, and the designer could then evaluate and refine the options based on their preferences.

Another example is the design of a pedestrian bridge in Amsterdam using generative design. The architects included various parameters, such as the span of the bridge, the load-bearing capacity, and the desired aesthetics. The generative design algorithm then created multiple options for the bridge, each with different configurations of structural elements and shapes. The architects could then evaluate and select the best design option based on the project requirements.

Generative design is also used in the design of buildings to optimize structural performance and energy efficiency. For example, in the design of a skyscraper, the algorithm would generate multiple options for the building’s structure and facade, taking into account factors such as wind and solar exposure, structural stability, and energy consumption. The designers could then select the most appropriate design option based on their preferences and project requirements.

Could Generative Design Be the New Norm?

Generative design already provides substantial benefits to computational engineering and research. Those benefits include:

Efficiency: Generative design can quickly generate a large number of design options that meet specific design criteria. This saves designers time and helps them explore a wider range of possibilities, leading to more efficient design processes.

Optimization: Generative design can improve designs for specific performance criteria, such as structural stability, energy efficiency, or aesthetic appeal. This leads to better-performing designs that are tailored to specific needs.

Innovation: Generative design allows for the exploration of new design possibilities and can lead to innovative approaches to complex design problems. It can help designers break out of traditional design paradigms and explore new ideas.

Sustainability: Generative design can help designers create more sustainable designs by optimizing for energy efficiency, material use, and environmental impact.

Personalization: Generative design can be used to create customized designs that meet the specific needs and preferences of individual users, such as personalized medical devices or custom-fit clothing.

Collaboration: Generative design can facilitate collaboration between designers, engineers, and other stakeholders by providing a common platform for exploring design possibilities and evaluating options.

Overall, generative design is a powerful tool that enables designers to create more efficient, optimized, and innovative designs that better meet the needs of users and stakeholders. As technology continues to advance, generative design is expected to become increasingly prevalent in various fields, including architecture, product design, and engineering.

Physics Informed Neural Networks: Understanding Their Advantages, Learning Methods, and Applications

Physics informed neural networks, commonly referred to as PINNs, are a class of machine learning algorithms that combine deep neural networks with physical principles to enhance the accuracy and robustness of the predictions. These algorithms have gained significant attention in recent years, owing to their ability to integrate physics-based constraints into the training process, leading to enhanced generalization and improved predictive performance.

The Different Use Cases for Physics Informed Neural Networks

Physics informed neural networks are used in various fields, including fluid dynamics, mechanics, quantum physics, and many more. These networks can be used to solve complex differential equations that arise in physics-based problems, such as modeling the dynamics of fluids and predicting the behavior of materials under various conditions. PINNs can also be used for optimization, control, and inverse problems, such as identifying the parameters of a physical system from its observed behavior.
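To give a feel for an inverse problem, the sketch below recovers an unknown decay rate k in the equation du/dt = -k u from sampled observations, by choosing the k that makes the equation residual as small as possible. It is a simplified least-squares stand-in for what a PINN does, with no neural network involved, and the observations are synthetic values generated with a known rate so that the result can be checked.

```python
import math


def estimate_decay_rate(times, values):
    """Least-squares estimate of k in du/dt = -k * u from sampled data."""
    num = 0.0
    den = 0.0
    for i in range(1, len(times) - 1):
        # Central difference approximates du/dt at interior samples.
        dudt = (values[i + 1] - values[i - 1]) / (times[i + 1] - times[i - 1])
        # Minimizing sum (dudt + k * u)^2 over k gives k = -sum(dudt * u) / sum(u * u).
        num += dudt * values[i]
        den += values[i] * values[i]
    return -num / den


# Synthetic "observations" generated with a known rate of 0.7 for checking.
times = [0.1 * i for i in range(21)]
values = [math.exp(-0.7 * t) for t in times]
k_estimate = estimate_decay_rate(times, values)
```

The estimate lands close to, but not exactly on, 0.7 because the finite-difference derivative is itself an approximation.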

Understanding the Physics Informed Neural Network Theory

The theory behind physics informed neural networks involves integrating physics-based constraints into the training process. A PINN typically combines two ingredients: a neural network that approximates the state of a physical system, and a physics residual that measures how far the network's output is from satisfying the governing equations. The residual is usually computed by differentiating the network's output and substituting it into those equations, and it is added to the training loss alongside any data and boundary-condition terms. By minimizing this combined loss, PINNs can learn the underlying physical relationships and use them to make accurate predictions. The residual term also acts as a regularizer, reducing overfitting and improving the generalization of the network.
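The physics-based part of the training objective can be sketched in a few lines for a simple equation, du/dx = -u on the interval from 0 to 1 with u(0) = 1. In the sketch below, plain Python functions stand in for the neural network and a central finite difference stands in for automatic differentiation, so this shows only how the loss is assembled, not how a PINN is trained. The function names and the choice of equation are illustrative assumptions.

```python
import math


def derivative(u, x, h=1e-4):
    """Central finite difference; a stand-in for automatic differentiation."""
    return (u(x + h) - u(x - h)) / (2.0 * h)


def pinn_loss(u, collocation_points):
    """Composite loss for du/dx = -u on [0, 1] with u(0) = 1."""
    # Physics term: mean squared residual of the ODE at the collocation points.
    residuals = [derivative(u, x) + u(x) for x in collocation_points]
    physics = sum(r * r for r in residuals) / len(residuals)
    # Boundary term: squared mismatch with the known condition u(0) = 1.
    boundary = (u(0.0) - 1.0) ** 2
    return physics + boundary


points = [i / 10 for i in range(11)]
loss_exact = pinn_loss(lambda x: math.exp(-x), points)  # true solution
loss_wrong = pinn_loss(lambda x: 1.0 - x, points)       # satisfies only u(0) = 1
```

The true solution drives the loss to nearly zero, while a candidate that only matches the boundary condition is penalized by the physics term. Training a PINN amounts to adjusting the network's weights to push this kind of loss down.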

The 3 Learning Methods of Neural Networks

The three most common learning methods of neural networks are supervised learning, unsupervised learning, and reinforcement learning.

In supervised learning, the network is trained on labeled data, where the correct output is known. In unsupervised learning, the network is trained on unlabeled data, and it is up to the network to find patterns and structure in the data. Reinforcement learning involves training a network to take actions in an environment to maximize a reward signal.

The Advantage of Physics Informed Neural Networks

The main advantage of PINNs is their ability to incorporate physical principles into the learning process, leading to more accurate and robust predictions. This is particularly useful when working with complex physical systems where data may be limited or noisy. PINNs can also handle high-dimensional data, making them suitable for a wide range of applications. Moreover, they can be trained on a limited dataset, which is often all that is available for many physical systems.

Can Physics Informed Neural Networks Be Accurate?

Physics informed neural networks have been shown to be accurate in a variety of applications. In some cases, particularly inverse problems and settings with sparse or noisy data, they can complement or compete with traditional numerical methods such as finite element analysis (FEA) or computational fluid dynamics (CFD). However, their accuracy depends on the quality of the physical model and the available data. Therefore, it is essential to carefully validate the model and ensure that the data is representative of the physical system.

Physics Informed Neural Networks On Specific Applications

Python and PINNs

Python is a popular programming language used for machine learning and scientific computing. There are several libraries available in Python for building physics informed neural networks, including TensorFlow, PyTorch, and Keras. These libraries provide a range of tools for building and training neural networks, and their support for automatic differentiation makes it possible to integrate physical constraints into the learning process.

How Does Tesla Use Neural Networks?

Tesla uses neural networks in various aspects of its products, including its autonomous driving technology, predictive maintenance, and energy products.

Autonomous Driving Technology

Tesla’s Autopilot system uses a deep neural network to process data from the car’s sensors and make driving decisions. The neural network analyzes the data from the car’s cameras, radar, and ultrasonic sensors to detect obstacles, pedestrians, and other vehicles. It can also identify road markings, traffic signs, and traffic lights. This technology is constantly evolving, and Tesla is continuously improving the neural network to make it safer and more reliable.

Predictive Maintenance

Tesla also uses neural networks to predict when maintenance is needed on its vehicles. The neural network analyzes data from the car’s sensors, such as battery voltage, temperature, and charging patterns, to predict when a component may fail or require maintenance. This helps Tesla to proactively address issues before they become more serious, reducing downtime for the owner and improving overall reliability.

Energy Products

Tesla’s energy products, such as the Powerwall and Powerpack, also use neural networks to optimize energy usage. The neural network analyzes data from the energy system, such as solar panel output, energy usage patterns, and weather forecasts, to predict how much energy will be needed and when. This allows the energy system to operate more efficiently, reducing costs for the owner and improving overall energy sustainability.

The Promise of Physics Informed Neural Networks

The future of PINNs looks very promising. As the field of artificial intelligence continues to evolve, the integration of physics-based constraints into machine learning algorithms is becoming increasingly important. Here are some potential directions for the future of PINNs:

Improved accuracy: As the datasets used for training PINNs become larger and more diverse, the accuracy of the models is likely to continue to improve. This will be particularly important for complex physical systems where accurate predictions are critical.

Faster computations: As the field of deep learning continues to advance, new architectures and optimization methods will be developed that can make PINNs faster and more efficient. This will be particularly important for applications such as autonomous driving, where computations must be performed in real time.

More applications: As researchers continue to explore the capabilities of PINNs, new applications are likely to emerge. These could include applications in fields such as astrophysics, quantum mechanics, and climate science, where accurate predictions are critical for understanding complex physical phenomena.

Integration with other machine learning techniques: PINNs could be combined with other machine learning techniques, such as reinforcement learning or unsupervised learning, to create more powerful models. This could help PINNs tackle even more complex physical systems and problems.

Overall, the future of physics informed neural networks is promising, and it is likely that these algorithms will play an increasingly important role in helping computational research and engineering become even more effective.

AI Simplified for Computational Science & Engineering

Rescale makes it fast and easy for research and engineering teams to tap into the power of AI for accelerating scientific discoveries and design breakthroughs.

To learn more about how Rescale provides the high performance computing necessary for AI-driven innovation, please contact us to speak with one of our HPC experts today.